In the world of programming and computer science, understanding data types is crucial for efficient coding. One of the most fundamental data types is the integer, commonly referred to as “int.” But how many bytes does an int actually consume? This seemingly simple question can have different answers depending on the programming language and architecture in use.

Typically, an int occupies a specific number of bytes, but variations exist across systems. For instance, on a 32-bit architecture an int usually takes up 4 bytes. On mainstream 64-bit systems it still takes 4 bytes, because the platform's data model decides its width, not the processor's word size. The data model is the set of size conventions that compilers for that platform follow. Grasping these details not only enhances programming skills but also helps in optimizing memory usage and performance.

How Many Bytes In Int

Data types define the kind of data a variable can hold in programming. Each type occupies a different amount of memory, which affects both performance and memory consumption.

Common Data Types

- Integers: Whole numbers without fractions. An int typically occupies 4 bytes on both 32-bit and 64-bit architectures.

- Floating Point: Numbers containing decimals. Commonly, a float takes up 4 bytes, and a double occupies 8 bytes.

- Characters: Single symbols or letters. A char is 1 byte in C and C++. Wide characters use 2 bytes or more: Java's char and C's wchar_t on Windows take 2 bytes, while wchar_t on Linux takes 4.

- Boolean: Represents true or false. A boolean usually takes 1 byte, even though it needs only 1 bit. Bit-packed containers such as C++'s std::vector<bool> can store each value in a single bit.
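Python's standard ctypes module mirrors the C types of the platform the interpreter was built for. That makes it a quick way to check these sizes on a given machine. The sketch below assumes a CPython build using the platform's native C ABI. The numbers it prints come from that ABI, so they may differ on unusual platforms. `type_sizes` is an illustrative helper name.

```python
import ctypes

# Each ctypes type mirrors the corresponding C type for the platform
# the Python interpreter was compiled for.
C_TYPES = {
    "int": ctypes.c_int,
    "float": ctypes.c_float,
    "double": ctypes.c_double,
    "char": ctypes.c_char,
    "wchar_t": ctypes.c_wchar,
    "bool": ctypes.c_bool,
}

def type_sizes():
    """Return a dict mapping C type names to their size in bytes."""
    return {name: ctypes.sizeof(t) for name, t in C_TYPES.items()}

for name, size in type_sizes().items():
    print(f"{name:8} {size} byte(s)")
```

On a typical 64-bit Linux build, wchar_t reports 4 bytes. On Windows it reports 2.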

Size Variation

The size of a data type can vary with system architecture and compiler. Checking a size, rather than assuming it, gives confidence that the chosen type fits a specific application. Types also interact with one another in memory. When a struct mixes types of different sizes, the compiler may insert padding so that each field is properly aligned, and the struct can then occupy more memory than the sum of its fields. Keeping this in mind supports better memory management and performance optimizations.
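Alignment padding is one concrete way that types interact in memory. The sketch below uses ctypes.Structure, which follows the platform C compiler's layout rules, to compare two orderings of the same three fields. The class names are illustrative. On typical platforms where int is 4 bytes and 4-byte aligned, 6 bytes of actual data occupy 12 bytes in one order and 8 in the other.

```python
import ctypes

# Same three fields, declared in two different orders.
class CharIntChar(ctypes.Structure):
    _fields_ = [("a", ctypes.c_char), ("b", ctypes.c_int), ("c", ctypes.c_char)]

class IntCharChar(ctypes.Structure):
    _fields_ = [("b", ctypes.c_int), ("a", ctypes.c_char), ("c", ctypes.c_char)]

# Bytes of actual data, ignoring any padding.
raw = 2 * ctypes.sizeof(ctypes.c_char) + ctypes.sizeof(ctypes.c_int)

print("sum of field sizes:", raw)
print("char, int, char:", ctypes.sizeof(CharIntChar))  # padding before and after b
print("int, char, char:", ctypes.sizeof(IntCharChar))  # padding only at the end
print("offset of b in CharIntChar:", CharIntChar.b.offset)
```

Placing the widest fields first is a common way to reduce padding. The exact savings depend on each type's alignment on the target platform.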

Understanding Integers

Integers, or “int” types, represent whole numbers without fractions. Their size can impact performance and memory allocation in programming.

Definition of Integers

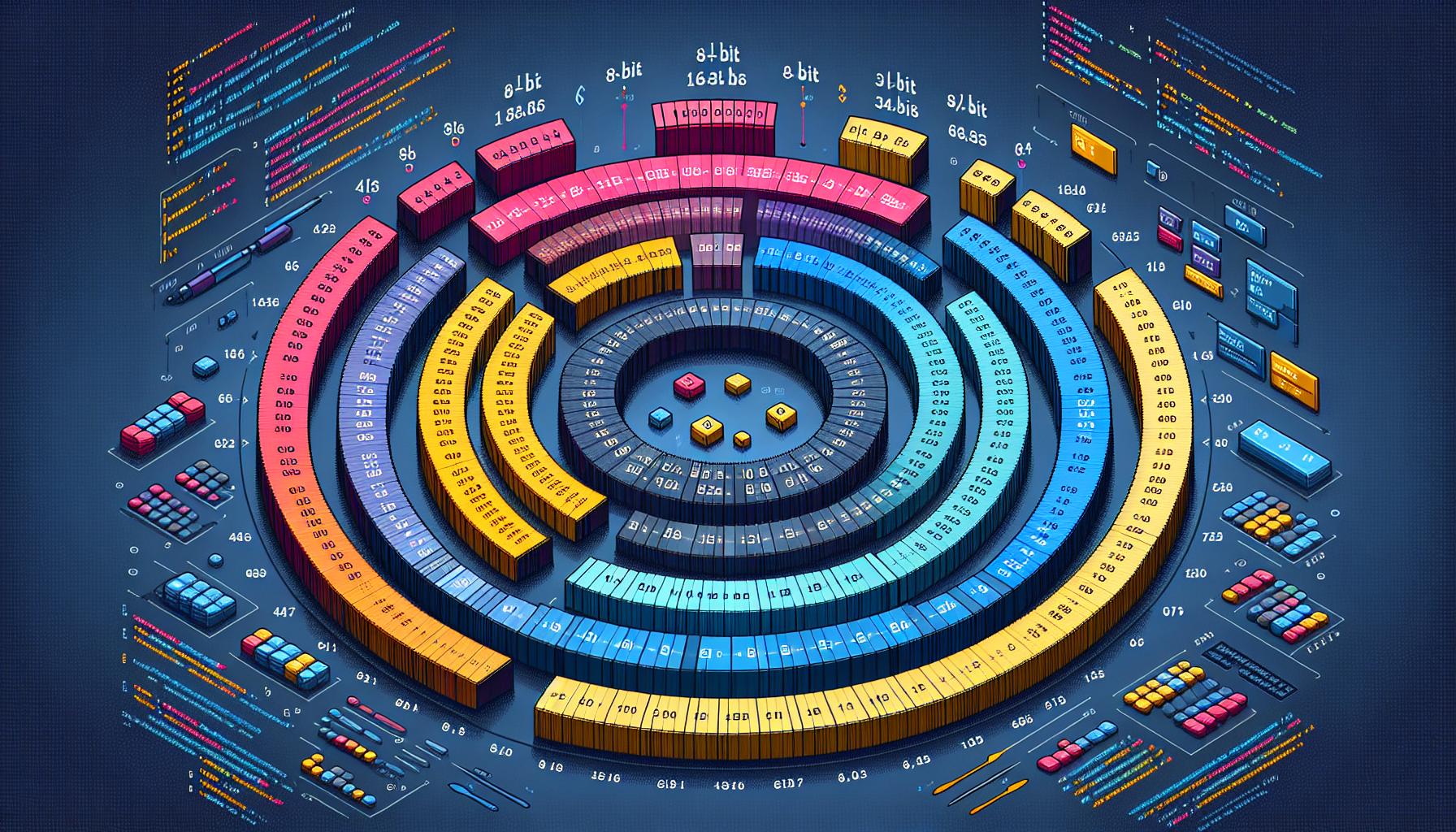

Integers are data types designed to store whole numbers. Signed integers hold both negative and positive values, and their range depends on the bit width. With the two's-complement representation used by virtually all modern hardware, common ranges are:

- 8-bit Integer: Ranges from -128 to 127

- 16-bit Integer: Ranges from -32,768 to 32,767

- 32-bit Integer: Ranges from -2,147,483,648 to 2,147,483,647

- 64-bit Integer: Ranges from -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807
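Each range in the list follows from one formula. An n-bit two's-complement signed integer spans -2^(n-1) to 2^(n-1) - 1. The short sketch below derives the ranges from the bit width. `signed_range` is an illustrative helper name.

```python
def signed_range(bits):
    """Return (min, max) for a two's-complement signed integer of `bits` bits."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1

for bits in (8, 16, 32, 64):
    lo, hi = signed_range(bits)
    print(f"{bits}-bit signed: {lo:,} to {hi:,}")
```

The asymmetry (one more negative value than positive) comes from zero taking one of the non-negative bit patterns.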

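The range determines what happens when a computation produces a value that does not fit: the result overflows. In C and C++, signed overflow is undefined behavior. In Java, and in C# by default, the value wraps around to the opposite end of the range. This makes it crucial for programmers to choose a size whose range covers every value a variable may hold.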
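Python's own ints never overflow. That makes it easy to model what a wrapping 32-bit int, such as Java's, would hold. The sketch below reduces any value modulo 2^32 into the signed range. It models Java-style wrapping only, not C, where signed overflow is undefined. `wrap_int32` is an illustrative helper name.

```python
def wrap_int32(value):
    """Map an arbitrary Python int onto the value a wrapping 32-bit
    two's-complement int (such as Java's int) would hold."""
    return (value + 2**31) % 2**32 - 2**31

INT32_MAX = 2**31 - 1
print(INT32_MAX + 1)              # 2147483648 (Python ints grow as needed)
print(wrap_int32(INT32_MAX + 1))  # -2147483648 (a wrapping int jumps to the minimum)
```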

Importance of Integer Size

Understanding integer size matters for resource management and application performance. Smaller integer types occupy less memory, a saving that matters most in large arrays or data sets. For instance:

- 32-bit Int: Occupies 4 bytes and covers values up to about 2.1 billion, enough for most counters and loop variables.

- 64-bit Int: Occupies 8 bytes and suits values beyond that limit, such as file sizes, byte offsets in large files, or timestamps in milliseconds.

Choosing an integer size is a trade-off. A type that is too small risks overflow errors, while one that is larger than necessary wastes memory and cache space. Picking the smallest type whose range safely covers the expected values balances both.
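A minimal sketch of that rule follows. It picks the narrowest standard signed width (8, 16, 32 or 64 bits) whose range holds every given value. `smallest_signed_width` is a hypothetical helper. Real code would also leave headroom for values expected to grow.

```python
def smallest_signed_width(*values):
    """Return the narrowest of 8/16/32/64 bits whose signed range holds every value."""
    for bits in (8, 16, 32, 64):
        lo, hi = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
        if all(lo <= v <= hi for v in values):
            return bits
    raise OverflowError("values need more than 64 bits")

print(smallest_signed_width(100, -5))            # 8
print(smallest_signed_width(1_700_000_000_000))  # 64: a millisecond timestamp exceeds 32 bits
```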

How Many Bytes in Int?

The number of bytes an int occupies depends on the programming language and, in some languages, on the platform. On mainstream desktop and server platforms an int is 4 bytes. 2-byte ints still appear on some 16-bit microcontrollers, and 8-byte ints are rare.

Standard Sizes Across Languages

Most programming languages define int sizes as follows:

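- C/C++: Usually 4 bytes, but the standards only guarantee at least 16 bits. sizeof(int) reports the actual size on a given platform.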

- Java: Consistently 4 bytes across all platforms.

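- Python: Arbitrary precision. An int grows as needed to hold its value, and because every int is a full object, even a small one such as 1 takes about 28 bytes on 64-bit CPython (as reported by sys.getsizeof).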

- C#: Always 4 bytes, since int is an alias for System.Int32.

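- JavaScript: The Number type is a 64-bit (8-byte) IEEE 754 double, so it represents integers exactly only up to 2^53 - 1 (Number.MAX_SAFE_INTEGER). Bitwise operators work on 32-bit integers, and BigInt covers arbitrarily large values.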

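Java and C# fix int at 4 bytes in their specifications. The exceptions come from two sources. In languages such as C and C++, the size depends on the target platform. In implementations such as Python's, integers have no fixed size at all.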
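Both the Python and JavaScript entries can be checked from Python. The sketch below shows that a Python int's footprint grows with its magnitude. It then shows why a 64-bit double, which is Python's float and JavaScript's Number, stops distinguishing consecutive integers above 2^53. The byte counts printed by sys.getsizeof include CPython's object header and vary between Python versions, so treat them as illustrative.

```python
import sys

# Python ints are arbitrary-precision objects: their memory footprint grows
# with magnitude instead of being fixed at 4 or 8 bytes.
for n in (1, 2**31, 2**64, 2**200):
    print(f"{n.bit_length():>3} bits -> {sys.getsizeof(n)} bytes")

# A 64-bit IEEE 754 double has a 53-bit significand, so above 2**53
# consecutive integers round to the same double.
print(float(2**53) == float(2**53 + 1))  # True
```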

Variations in Different Architectures

Architecture affects int size less than one might expect:

- 32-bit Architecture: Typically uses 4 bytes for int types.

- 64-bit Architecture: Still uses 4 bytes for int on mainstream platforms. Pointers grow to 8 bytes, but int does not. An 8-byte int (the ILP64 data model) exists on a few systems but is rare.

Understanding these architectural variations helps optimize memory usage and ensure efficient performance in programming tasks.
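A platform's data model fixes the sizes of int, long and pointers. The rough sketch below assumes a Python interpreter built with the platform's native C compiler. It reads those three sizes through ctypes and matches them against the common models: ILP32, LP64 (64-bit Linux and macOS), LLP64 (64-bit Windows) and ILP64. The lookup table is a simplification, and `data_model` is an illustrative helper name.

```python
import ctypes

def data_model():
    """Classify the C data model from the sizes of int, long, and pointers."""
    int_size = ctypes.sizeof(ctypes.c_int)
    long_size = ctypes.sizeof(ctypes.c_long)
    ptr_size = ctypes.sizeof(ctypes.c_void_p)
    models = {
        (4, 4, 4): "ILP32 (typical 32-bit)",
        (4, 8, 8): "LP64 (64-bit Linux, macOS)",
        (4, 4, 8): "LLP64 (64-bit Windows)",
        (8, 8, 8): "ILP64 (rare)",
    }
    sizes = (int_size, long_size, ptr_size)
    return sizes, models.get(sizes, "other")

sizes, model = data_model()
print("int/long/pointer bytes:", sizes, "->", model)
```

The pointer size reveals whether the build is 32-bit or 64-bit. In the three common models, int is 4 bytes either way.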

Factors Influencing Integer Size

Integer size can vary significantly due to multiple factors, including compiler settings and operating system differences. Understanding these influences allows programmers to make informed decisions regarding data type selection.

Compiler Settings

The compiler determines how many bytes an int occupies by implementing the target platform's ABI. Optimization levels do not change the size, but compiling for a different target can. For example, a compiler targeting a 16-bit microcontroller typically makes int 2 bytes, while one targeting x86-64 makes it 4. When code needs an exact size, C99's <stdint.h> (and C++'s <cstdint>) provides fixed-width typedefs such as int32_t (exactly 32 bits, or 4 bytes) and int64_t (exactly 64 bits, or 8 bytes). With these, the width no longer depends on the platform's choice for int, even within the same programming language.
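Python exposes the same distinction between native and fixed-width sizes. The sketch below shows it in two modules. In ctypes, c_int32 and c_int64 are always 4 and 8 bytes. In the struct module, a byte-order prefix such as "<" selects "standard" sizes that do not vary by platform, while the default "@" prefix follows the local C compiler. The packed values are arbitrary examples.

```python
import ctypes
import struct

# Fixed-width ctypes types: 32-bit and 64-bit signed integers on every platform.
print(ctypes.sizeof(ctypes.c_int32), ctypes.sizeof(ctypes.c_int64))

# Standard-size mode ("<"): 'i' is always 4 bytes and 'q' always 8.
print(struct.calcsize("<i"), struct.calcsize("<q"))
# Native mode ("@", the default): sizes follow the local C compiler.
print(struct.calcsize("@i"), struct.calcsize("@q"))

# A fixed-width little-endian layout produces identical bytes on every
# platform, which matters for file formats and network protocols.
payload = struct.pack("<iq", -1, 2**40)
print(len(payload), payload.hex())
```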

Operating System Differences

Operating system differences also affect integer size, because each operating system defines an ABI that fixes the sizes of C's built-in types for a given architecture. A 32-bit Windows application uses 4 bytes for int. 64-bit Windows keeps both int and long at 4 bytes (the LLP64 model). 64-bit Linux and macOS follow the LP64 model instead: int stays at 4 bytes, but long grows to 8. Code that assumes long is 8 bytes therefore behaves differently on Windows, which is one more reason to prefer fixed-width types when the exact size matters.

Practical Implications

Understanding the implications of integer byte sizes leads to better memory management and enhanced performance in programming. Specific considerations include memory allocation and execution efficiency.

Memory Management

Memory management involves optimizing the use of memory resources. Choosing the appropriate integer size directly affects memory allocation.

- Selecting smaller integer types, such as 8-bit or 16-bit, can conserve memory, particularly for large data sets. For example, a large array of 8-bit integers uses significantly less memory than an array of 32-bit integers.

- Understanding the minimum and maximum ranges of integers helps prevent overflow errors. Using a larger data type when needed mitigates risks of data loss due to insufficient space.

- Bit packing stores several small values in one larger integer, for example four 8-bit values in a single 32-bit word. This saves memory when each value needs only a few bits.
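Both memory techniques in the list above can be sketched in Python. Python's array module stores values as raw C types, so its itemsize shows the per-element cost of an 8-bit array versus an int array. The bit-packing part places four 0-255 channel values in one 32-bit word using shifts and masks. `pack_rgba` and `unpack_rgba` are hypothetical helper names chosen for illustration.

```python
from array import array

N = 1_000_000
# Typecode 'b' is a signed char (1 byte); 'i' is a C int (usually 4 bytes).
small = array("b", bytes(N))   # N zeros stored as 8-bit integers
large = array("i", [0]) * N    # N zeros stored as C ints
print(small.itemsize * len(small), "bytes vs", large.itemsize * len(large), "bytes")

def pack_rgba(r, g, b, a):
    """Pack four 0-255 channel values into one 32-bit integer."""
    for v in (r, g, b, a):
        if not 0 <= v <= 0xFF:
            raise ValueError("channel out of range")
    return (r << 24) | (g << 16) | (b << 8) | a

def unpack_rgba(word):
    """Recover the four channels by shifting and masking each byte."""
    return (word >> 24) & 0xFF, (word >> 16) & 0xFF, (word >> 8) & 0xFF, word & 0xFF

packed = pack_rgba(255, 128, 0, 64)
print(hex(packed), unpack_rgba(packed))
```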

Performance Considerations

Performance considerations focus on execution efficiency and speed. The size of integers influences both processing time and computational resource consumption.

- On modern 64-bit CPUs, basic arithmetic on 32-bit and 64-bit integers usually runs at the same speed, so smaller integers are rarely faster per operation. Their advantage comes mainly from reduced memory traffic and from SIMD instructions, which fit more narrow values into each vector register. A 256-bit register holds eight 32-bit integers but only four 64-bit ones.

- Memory access patterns play a critical role in performance. Smaller types pack more values into each cache line: a typical 64-byte line holds sixteen 32-bit integers but only eight 64-bit ones. As a result, the CPU fetches fewer lines from memory for the same number of values.

- Fixed-width types such as int32_t and int64_t let developers control exactly how much memory each value uses. <stdint.h> also provides int_fast32_t, which asks the compiler for the fastest type with at least 32 bits on the target architecture.
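The cache-line arithmetic above can be made concrete. The sketch below assumes a 64-byte cache line, which is common on x86-64 and many ARM cores but not universal. It also assumes a contiguous array that starts on a line boundary. `cache_lines_touched` is a hypothetical helper, and its results are lower bounds on memory traffic, not timings.

```python
CACHE_LINE_BYTES = 64  # assumption: typical, but not universal

def cache_lines_touched(count, element_bytes, line_bytes=CACHE_LINE_BYTES):
    """Minimum number of cache lines a contiguous array of `count` elements
    occupies, assuming it starts on a line boundary (integer ceiling division)."""
    return (count * element_bytes + line_bytes - 1) // line_bytes

for width in (1, 2, 4, 8):
    per_line = CACHE_LINE_BYTES // width
    lines = cache_lines_touched(1_000_000, width)
    print(f"{width}-byte ints: {per_line} per line, {lines:,} lines for 1M values")
```

Halving the element width halves the number of lines a full scan must bring into cache. This is where most of the speed benefit of smaller types comes from.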

Understanding how many bytes an int occupies is critical for programmers aiming to optimize their applications. The nuances of integer sizes across different programming languages and architectures can significantly impact memory usage and performance. By selecting the right integer type based on specific needs and system requirements, developers can enhance efficiency and prevent potential issues like overflow errors.

Incorporating best practices in data type selection not only conserves memory but also improves processing speed. With the right knowledge, programmers can make informed decisions that lead to better resource management and ultimately more efficient code.