In the world of computing, understanding data sizes is crucial. Bits and bytes are the fundamental units of digital information, and they play a significant role in how data is processed and stored. As technology advances, knowing the relationship between these units becomes increasingly important, especially for those working in tech or simply curious about how their devices function.

When it comes to 64 bits, a common question arises: how many bytes does that equate to? This seemingly simple query opens the door to a deeper understanding of data representation in computers. By grasping the connection between bits and bytes, individuals can better appreciate the intricacies of digital systems and make informed decisions about technology use.

How Many Bytes Is 64 Bits

Bits and bytes represent essential building blocks of digital information. Understanding their definitions and relationships aids in comprehending how data is processed and stored in computing systems.

Definition of Bits

Bits are the smallest unit of data in computing, denoted as either a 0 or a 1. Each bit represents a binary digit, conveying simple on-off states. Bits serve as the foundation for all forms of digital communication and data representation.

Definition of Bytes

Bytes consist of eight bits, functioning as a fundamental unit for data measurement. Because each of those eight bits can be 0 or 1, a byte can represent 2^8 = 256 distinct values (0 through 255), which is enough to store one character in an encoding such as ASCII. The byte serves as the standard measure when discussing memory sizes and file storage, making it crucial for understanding data capacity.
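As a small illustration of the definition above, the following Python sketch counts the values one byte can hold and shows the single byte that stores the letter "A" in ASCII. The variable names are chosen here for illustration only.

```python
# A byte has 8 bits, so it can take 2**8 distinct values (0 through 255).
BITS_PER_BYTE = 8
values_per_byte = 2 ** BITS_PER_BYTE
print(values_per_byte)  # 256

# In ASCII, each character is stored in exactly one byte.
encoded = "A".encode("ascii")
bit_pattern = format(encoded[0], "08b")  # the byte written as eight binary digits
print(len(encoded), encoded[0], bit_pattern)  # 1 65 01000001
```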

The Relationship Between Bits and Bytes

Understanding the relationship between bits and bytes is crucial in the realm of computing. Bits and bytes serve as the building blocks of digital data, and knowing how to convert between them provides clarity in data size measurement.

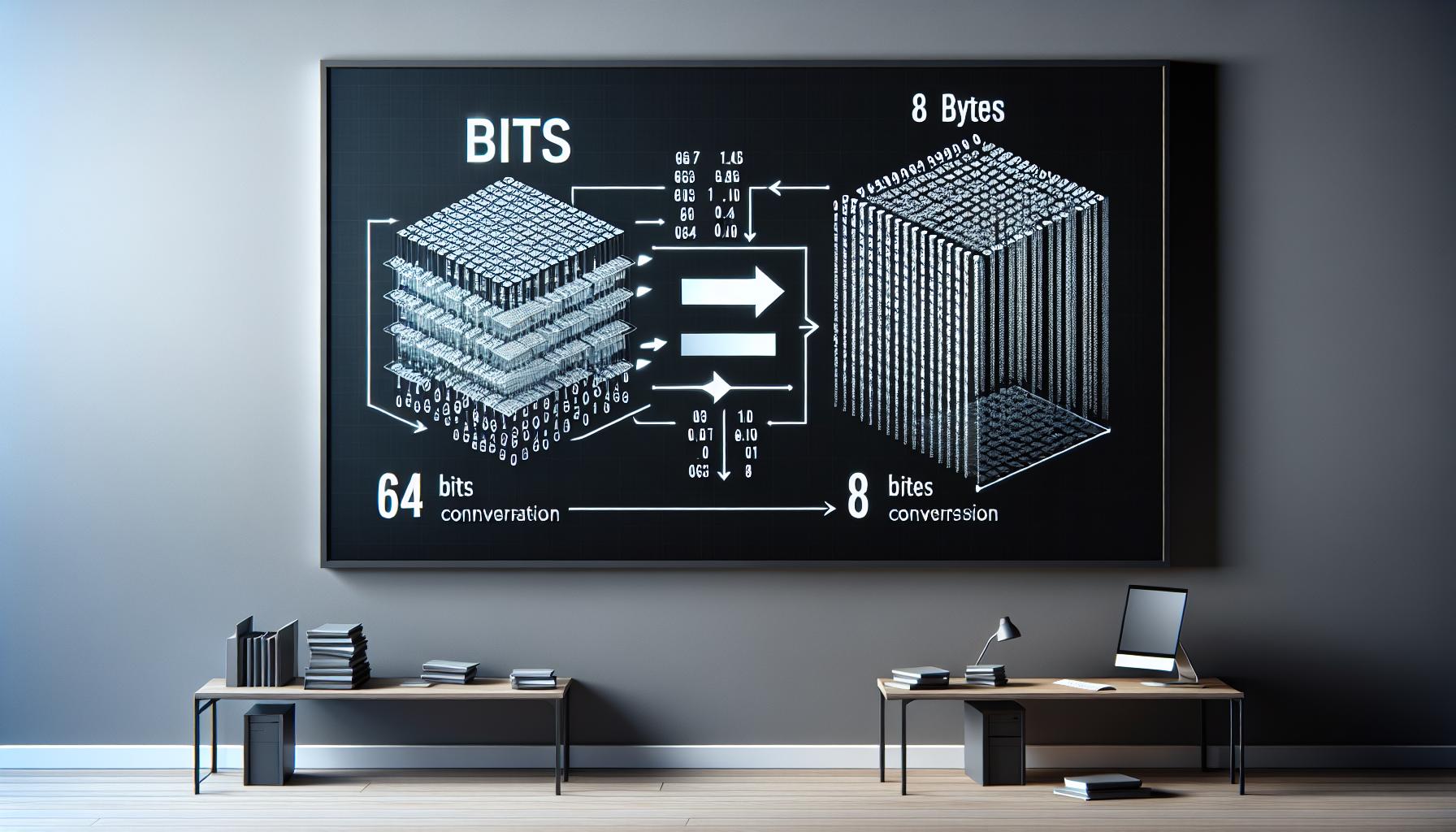

Conversion Formula

The conversion between bits and bytes follows a straightforward formula. One byte equals eight bits. Thus, to convert bits to bytes, divide the number of bits by eight. This basic calculation applies universally, facilitating the conversion of various data sizes.
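The formula can be sketched in a few lines of Python. The helper names `bits_to_bytes` and `bytes_to_bits` are made up for this example and do not come from any library.

```python
BITS_PER_BYTE = 8

def bits_to_bytes(bits: int) -> float:
    """Convert a bit count to bytes by dividing by eight."""
    return bits / BITS_PER_BYTE

def bytes_to_bits(num_bytes: int) -> int:
    """Convert a byte count back to bits by multiplying by eight."""
    return num_bytes * BITS_PER_BYTE

print(bits_to_bytes(64))  # 8.0
print(bytes_to_bits(8))   # 64
```

Returning a float keeps the sketch honest for bit counts that are not multiples of eight; for example, 12 bits come out as 1.5 bytes.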

Examples of Conversion

Real-world examples illustrate how this conversion works:

- 64 bits: 64 bits ÷ 8 bits/byte = 8 bytes

- 16 bits: 16 bits ÷ 8 bits/byte = 2 bytes

- 32 bits: 32 bits ÷ 8 bits/byte = 4 bytes

- 128 bits: 128 bits ÷ 8 bits/byte = 16 bytes

These examples demonstrate the consistent relationship between bits and bytes, reinforcing the ease of calculating data sizes in different contexts.

Calculating Bytes from 64 Bits

Converting 64 bits to bytes takes a single division by eight, using the formula above.

Step-by-Step Calculation

To convert bits into bytes, divide the number of bits by 8. For 64 bits, the calculation is straightforward:

- Identify the number of bits: 64 bits.

- Apply the conversion formula: 64 bits ÷ 8 bits/byte = 8 bytes.

This result indicates that 64 bits equal 8 bytes. Each step in this process reinforces the direct relationship between bits and bytes, reiterating that 8 bits combine to form a single byte.
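One way to check this result is to take the largest value that fits in 64 bits and see how many bytes Python needs to store it. This is only a sketch using the standard library; the variable names are illustrative.

```python
import struct

# Largest unsigned 64-bit value: all 64 bits set to 1.
max_u64 = 2**64 - 1
print(max_u64.bit_length())  # 64

# Serializing the value takes exactly 8 bytes.
raw = max_u64.to_bytes(8, byteorder="big")
print(len(raw))   # 8
print(raw.hex())  # ffffffffffffffff

# struct's "Q" code with standard sizing ("<") is an unsigned 64-bit integer.
print(struct.calcsize("<Q"))  # 8
```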

Practical Applications

Knowing how to convert bits to bytes proves essential in various contexts within technology. Below are some practical applications:

- Memory Allocation: Understanding data sizes allows for effective management of memory resources in applications.

- File Storage: Knowing file sizes in bytes aids in determining storage requirements in systems and drives.

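- Data Transfer Rates: Network bandwidth is usually quoted in bits per second while file sizes are given in bytes, so comparing the two means dividing by eight; a 100 Mbit/s link, for example, moves at most 12.5 megabytes per second.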

- Programming: Developers rely on these conversions to optimize code and ensure efficient data handling.

These applications exemplify the importance of converting 64 bits into 8 bytes, impacting computing tasks ranging from simple data storage to complex software development.
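To make the data transfer case concrete, here is a rough Python sketch. It assumes decimal prefixes (1 megabit = 1,000,000 bits) and ignores protocol overhead, and both function names are hypothetical.

```python
def megabits_to_megabytes_per_second(megabits_per_second: float) -> float:
    """Convert a link speed in Mbit/s to MB/s (8 bits per byte)."""
    return megabits_per_second / 8

def transfer_seconds(file_size_bytes: int, link_bits_per_second: int) -> float:
    """Ideal transfer time: file size in bits divided by link speed."""
    return file_size_bytes * 8 / link_bits_per_second

print(megabits_to_megabytes_per_second(100))        # 12.5
print(transfer_seconds(500_000_000, 100_000_000))   # 40.0
```

Under these assumptions, a 500 MB file needs about 40 seconds on a 100 Mbit/s link; real transfers will take longer.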

Implications of Understanding Bytes and Bits

Understanding bytes and bits significantly influences various aspects of computing. Knowledge of these units enhances effective technology use, especially in managing data representation and storage.

Importance in Computing

Bits are the smallest unit of data and determine how information is represented digitally. Bytes, comprising eight bits, are the unit in which data is measured, stored, and transmitted. This knowledge helps programmers choose data types of the right size and write efficient code. It also helps individuals read hardware specifications, such as memory capacity in bytes or a processor described as 64-bit.

Impact on Data Storage

Data storage relies heavily on the understanding of bytes and bits. Storage capacities are typically measured in bytes, making it essential to convert bits to bytes accurately. For instance, knowing that 64 bits equal 8 bytes plays a key role in evaluating file sizes and memory requirements. Accurate calculations enable efficient storage management, lead to optimal use of resources, and influence decisions regarding hardware upgrades. Understanding these concepts ultimately enhances overall data handling practices within digital environments.
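As a hedged example of estimating memory requirements, the sketch below works out how much space one million 64-bit values would need and confirms the per-value size by packing a smaller batch with Python's `struct` module. The counts are arbitrary, and megabytes are taken as 1,000,000 bytes.

```python
import struct

BYTES_PER_VALUE = 64 // 8  # each 64-bit value occupies 8 bytes

# Raw storage for one million 64-bit values.
count = 1_000_000
total_bytes = count * BYTES_PER_VALUE
print(total_bytes)              # 8000000
print(total_bytes / 1_000_000)  # 8.0 (decimal megabytes)

# Packing 1000 unsigned 64-bit integers yields 1000 * 8 bytes.
packed = struct.pack("<1000Q", *range(1000))
print(len(packed))  # 8000
```

This counts only the raw data; containers and file formats typically add their own overhead.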

Understanding the relationship between bits and bytes is crucial for anyone navigating the digital landscape. Knowing that 64 bits equals 8 bytes simplifies data size calculations and enhances one’s grasp of how devices operate. This knowledge is particularly valuable in fields like programming and data management where efficiency and accuracy matter.

As technology continues to evolve, being informed about these fundamental units empowers individuals to make better decisions regarding hardware and software. Whether it’s optimizing memory usage or evaluating file sizes, a solid understanding of bits and bytes lays the groundwork for effective technology management.